Product Experimentation: How Successful SaaS Teams Test, Learn, And Build Better Products

November 26, 2025 • 19 min read

Last Updated on February 6, 2026 by Sivan Kadosh

Product teams face more uncertainty today than ever before. New competitors appear overnight, user expectations shift quickly, and it has never been easier to build features that nobody truly needs. The teams that win are not the ones that ship the most; they are the ones that learn the fastest. Product experimentation gives SaaS companies a reliable way to validate ideas, reduce risk, and make confident decisions that drive measurable results.

This guide goes far deeper than the typical A/B testing tutorials you find online. You will learn how experimentation works at different levels of maturity, how to design strong hypotheses, how to choose the right experiment type, and how to adapt your approach for different business models. You will also see common failure points, real examples, and practical steps to create an experimentation engine that consistently improves your product.

The trap of common sense: Why PMs must be humble

By definition, a Product Manager must be the humblest person in the room. A PM who feels they “know it all” represents a strategic risk capable of driving startups into millions in losses. Why? Because ultimately, we aren’t using common sense to predict user behavior; we are guessing. Our true power lies in knowing that we are guessing, and having the discipline to test those guesses against reality.

A recent experience illustrates this perfectly. I was working with the founder of a successful startup that had been stuck at a $9.99 price point for five years. He was adamant about raising the price by 20%, arguing that “customers understand inflation” and insisting there was “no doubt” that conversion rates would remain stable. After a long debate, we agreed to run a controlled test.

The result? A total collapse. Conversion rates (both Visit-to-Trial and Trial-to-Paid) plummeted to 10% of their baseline.

This story isn’t an anomaly. It is a textbook example of “Overconfidence Bias,” a psychological trap, described by Data Driven Investor, in which leaders prioritize gut instinct over data. We see a nearly identical scenario in the famous Monetizely case study, where a company predicted minimal churn from a price hike but faced double the expected loss in reality.

The lesson is unambiguous: we can believe whatever we want, but as ProdPad emphasizes, until we perform rigorous “Assumption Testing,” our guesses are practically equivalent to gambling. The question is: Do you want to base your success on guesses, or on facts?

Key takeaways

- Product experimentation is a systematic approach to testing ideas, reducing risk, and improving product outcomes.

- High-performing SaaS teams use a maturity model, not random tests. This helps them scale experimentation safely and consistently.

- Good experiments start with clear goals, strong hypotheses, correct metrics, and realistic sample sizes.

- Experimentation looks different across freemium, enterprise, and marketplace models.

- A structured experimentation culture improves activation, retention, engagement, and revenue.

- A fractional CPO can help teams build experimentation systems when they lack strategy, senior oversight or alignment.

What is product experimentation

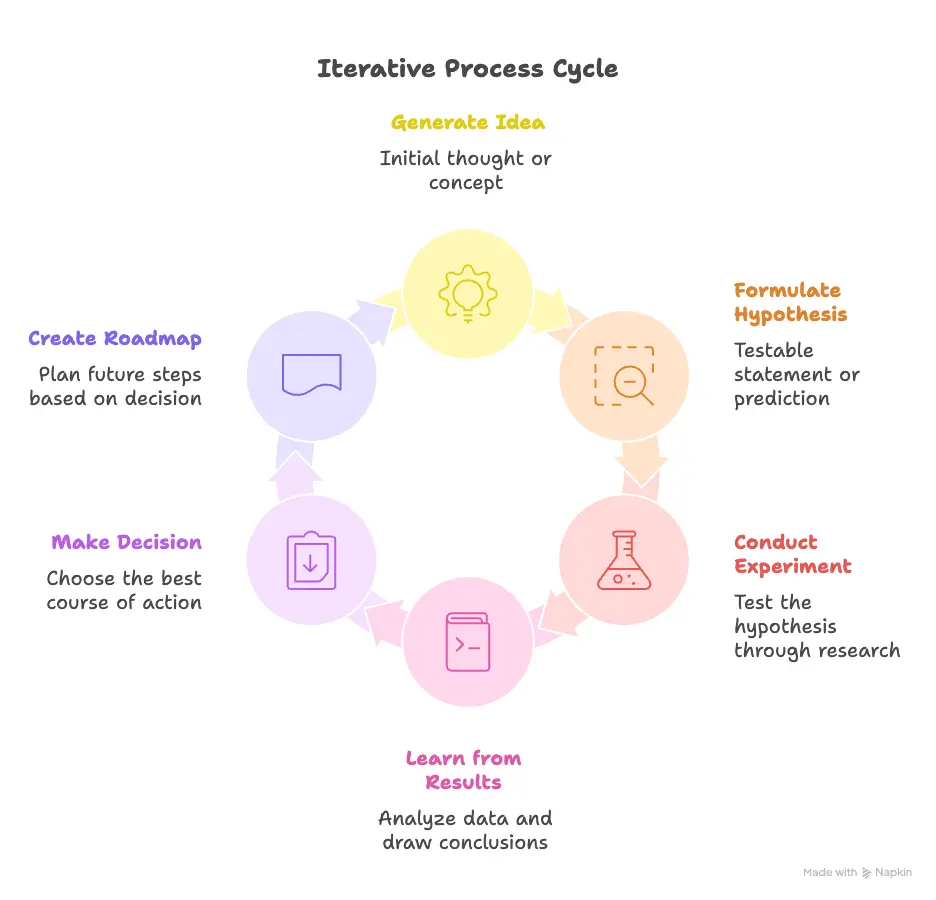

Product experimentation is the process of testing product changes in a controlled way to understand their real impact on user behavior and business metrics. Instead of relying on assumptions, you create evidence, learn faster, and avoid costly mistakes.

Experimentation is not guessing, shipping random features, or interpreting data with confirmation bias. It is not running ad hoc A/B tests with no hypothesis or clear success metric. True experimentation is structured and intentional. It is a core capability that sits inside the product strategy, not outside it.

When done well, experimentation improves product quality, reduces wasted development time, strengthens cross functional alignment, and leads to better long term growth. It helps teams learn what actually drives activation, engagement and conversion instead of relying on intuition.

Why product experimentation matters in SaaS

SaaS products operate in environments filled with fast feedback loops, data rich usage patterns, competitive pressure and rapidly shifting user needs. Experimentation helps teams respond with clarity instead of noise.

Faster learning, lower risk

Experimentation helps you validate ideas early, so you avoid investing months into features users do not need. This is especially valuable for resource constrained SaaS teams that cannot afford to build blindly.

Better user experience

Experiments uncover friction that slows down onboarding, lowers activation, or decreases retention. Fixing these areas has direct revenue impact.

Improved decision making

Clear data helps the team agree on direction without personal opinions taking over. This creates healthier discussions and more predictable outcomes.

Stronger product led growth

Experimentation supports core PLG metrics, from sign up flows to paywall tests to product education steps.

The experimentation maturity model

Successful experimentation programs do not appear overnight. They evolve through stages of maturity, each with its own challenges, learning curves and required processes. Understanding these stages helps teams identify where they are today and how to grow systematically.

Stage 1: Ad hoc testing

Teams in this stage run experiments occasionally without structure. There is enthusiasm but no consistent methodology.

Typical signs:

- Random A/B tests with unclear goals

- No documented hypotheses

- Experiments launched without sample size calculations

- Decisions based on gut feeling

- Lack of alignment between product, engineering and design

Risks:

- False positives

- Misleading results

- Low confidence in decisions

- Internal debates with no conclusion

Stage 2: Repeatable program

At this stage, teams introduce structure and process. There are templates, clearer hypotheses and a basic workflow everyone follows.

Typical signs:

- Hypotheses defined in a standard format

- Clear primary and secondary metrics

- Experiments tracked in a central repository

- Dedicated owner for experimentation

- Introduction of feature flags or basic testing tools

Benefits:

- More confidence in results

- Faster experiment cycles

- Fewer mistakes caused by poor design

Stage 3: Scalable experimentation

Here, experimentation becomes a core operating system. Cross functional teams collaborate, processes are refined, and more advanced methods appear.

Typical signs:

- Dedicated experimentation rituals, such as weekly review sessions

- Standardised templates and playbooks

- More consistent engineering involvement

- Faster experiment setup and teardown

- Use of guardrail metrics to prevent harmful rollouts

- Increased experiment velocity

Benefits:

- Better quality insights

- Stronger alignment between product, data and engineering

- Reduced tech debt around testing

Stage 4: Optimisation engine

This is the level most SaaS companies aspire to. Experimentation becomes the default way of working and a key part of the company’s strategy.

Typical signs:

- Experiments running constantly across multiple teams

- Bandit algorithms or sequential testing for complex decisions

- Automated impact dashboards

- Large scale, cross product experiments

- Clear links between experimentation and financial outcomes

- A healthy culture that prioritises learning over being right

Benefits:

- Predictable improvements across core metrics

- Ability to respond quickly to market changes

- Strong competitive advantage

Types of product experiments

Different questions require different types of experiments. Each method has strengths, limitations and ideal use cases. Choosing the wrong one leads to poor results, wasted effort and misleading conclusions, so selecting the right format is critical. Below is a more complete look at the most effective experiment types used in modern SaaS teams, along with practical guidance on when to use each.

A/B testing

A/B testing compares two versions of a specific experience, usually with one isolated change. It is best for high traffic areas where even small improvements can create measurable impact. A/B tests work well for UI tweaks, funnel optimisations, onboarding flows and paywall experiments.

When to use it:

- You have one clear hypothesis

- You need statistically reliable comparisons

- You want to measure conversion, engagement or retention changes

- You can control exposure through tooling

When not to use it:

- Sample sizes are too small

- The experience has too many variables to isolate

Multivariate testing

Multivariate tests evaluate several elements at once, such as different headline and button combinations. They help teams understand not only what works, but how elements interact.

When to use it:

- You want to optimise layouts or messaging

- You have enough traffic to support multiple combinations

- You are comfortable with more complex analysis

Limitations:

- Requires significantly larger sample sizes

- Results become difficult to interpret without rigorous setup
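
To make the traffic requirement concrete, the sketch below counts the cells in a full-factorial multivariate test. The element names, variants and the per-cell sample size are illustrative assumptions, not figures from a real experiment.

```python
from itertools import product

# Hypothetical page elements and their variants (illustrative only).
elements = {
    "headline": ["Save time", "Save money", "Work smarter"],
    "button": ["Start free trial", "Get started"],
    "image": ["screenshot", "illustration"],
}

# Full-factorial design: every combination of variants becomes one test cell.
cells = list(product(*elements.values()))

# Assumed per-cell sample size; in practice it would come from a power calculation.
users_per_cell = 5_000
total_users = len(cells) * users_per_cell

print(f"{len(cells)} combinations need about {total_users:,} users")
```

Adding a single extra variant to any element multiplies the number of cells, which is why multivariate tests tend to suit only high traffic surfaces.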

Fake door testing

Fake doors present a feature before it exists. When users click or attempt to use the feature, the team tracks interest. This helps validate demand before investing in development.

When to use it:

- You want to test appetite for a new feature or add-on

- You need fast directional insight

- Engineering resources are limited

Ethical considerations:

Always explain that the feature is not available yet. Transparency maintains user trust.

Dark launches

With dark launches, a feature is deployed to production but not visible to users. This allows teams to validate performance, reliability and system impact before exposing the feature to real customers.

When to use it:

- You need to test backend performance

- You want to identify integration risks early

- You want safety before enabling the feature publicly

Prototype testing

Prototype tests are powerful early in the product development cycle. Users interact with low or high fidelity prototypes so you can validate usability and intent before writing a line of code.

When to use it:

- You are exploring a new concept

- You want feedback before committing engineering time

- You need fast iteration cycles

Benefit:

Prevents teams from building complex features that fail usability tests.

Wizard of Oz experiments

In Wizard of Oz tests, a user interacts with a feature that appears automated, but a person handles the logic behind the scenes. This is ideal when testing complex ideas without building full functionality.

When to use it:

- You want to test workflows requiring intelligence or automation

- You are validating whether users rely on a potential future feature

- You want to de-risk heavy engineering investment

Feature flag rollouts

Feature flags allow teams to release changes gradually. You can enable a feature for 1 percent of users, then 5 percent, then 20 percent. This reduces risk and helps teams detect unexpected behavior before full rollout.

When to use it:

- You want to control exposure and limit blast radius

- You want to test changes with specific cohorts

- You want to safely iterate on feature behavior

Feature flags are foundational for mature experimentation programs.
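
One common way to implement a gradual rollout is deterministic hash bucketing, sketched below. This is a minimal illustration, not the implementation used by any particular feature flag vendor; the function names and the `new-onboarding` flag key are hypothetical.

```python
import hashlib

def bucket(user_id: str, flag_key: str) -> float:
    """Map a user to a stable number in [0, 100) for a given flag."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 8 hex characters (32 bits) as an integer, then scale to [0, 100).
    return int(digest[:8], 16) / 0x100000000 * 100

def is_enabled(user_id: str, flag_key: str, rollout_percent: float) -> bool:
    """A user is in the rollout if their bucket falls below the current percentage."""
    return bucket(user_id, flag_key) < rollout_percent

# Simulated user IDs, used only to show how exposure grows with the percentage.
users = [f"user-{i}" for i in range(10_000)]
for percent in (1, 5, 20):
    enabled = sum(is_enabled(u, "new-onboarding", percent) for u in users)
    print(f"{percent}% rollout -> {enabled} of {len(users)} users enabled")
```

Because the bucket depends only on the user and the flag key, a user who saw the feature at 1 percent keeps seeing it at 5 and 20 percent, and including the flag key in the hash keeps assignments independent across different flags.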

Bandit algorithms

Bandit algorithms optimise traffic allocation automatically. Instead of waiting for an experiment to complete, the algorithm gradually shifts more traffic to the better performing variant.

When to use it:

- You want to maximise conversions during an experiment

- You are working with many variants

- You operate in a dynamic environment with fast changing behavior

Benefit: Minimises opportunity cost by reducing traffic sent to poor performing options.
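
The traffic shifting described above can be illustrated with Thompson sampling, one of several bandit strategies. The sketch below is a simulation under stated assumptions: the conversion rates are made up and hidden from the algorithm, and a production system would also need to handle delayed conversions and changing behavior.

```python
import random

def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate a Beta-Bernoulli Thompson sampling bandit.

    true_rates are hidden conversion rates used only to simulate users.
    Returns how many users were sent to each variant.
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    pulls = [0] * len(true_rates)

    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its Beta posterior
        # (Beta(1, 1) prior, i.e. uniform before any data is seen).
        samples = [
            rng.betavariate(successes[i] + 1, failures[i] + 1)
            for i in range(len(true_rates))
        ]
        chosen = samples.index(max(samples))
        pulls[chosen] += 1

        # Simulate whether this user converts on the chosen variant.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return pulls

# Assumed rates: variant 0 converts at 5 percent, variant 1 at 15 percent.
pulls = thompson_sampling(true_rates=[0.05, 0.15], rounds=5_000)
print(f"Traffic per variant: {pulls}")
```

Early on both variants receive traffic, but as evidence accumulates the sampler sends most users to the stronger variant, which is the opportunity-cost benefit described above.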

Holdout groups

Holdout groups are a small set of users who do not receive a feature or change. By comparing them to users who do get the feature, you can measure long term impact that short experiments often miss.

When to use it:

- You want to measure retention, engagement or monetisation effects over time

- You need to understand the true impact of a feature beyond immediate metrics

- You want a long term control group for product changes

Holdouts are particularly valuable in SaaS because many features create delayed effects that do not appear in short experiments.

| Experiment Type | Best For | When Not To Use | Traffic Requirement | Time Investment |
|---|---|---|---|---|
| A/B Testing | • One clear hypothesis • UI tweaks, funnels, onboarding • Measuring conversion, engagement or retention | • Sample size too small • Too many variables to isolate | Medium to high | Medium |
| Multivariate Testing | • Optimising layouts or messaging • Testing multiple element combinations • Understanding interaction effects | • Low traffic • Teams unable to handle complex analysis | High | High |
| Fake Door Testing | • Validating demand for new features • Fast directional insight • Early prioritisation | • When transparency is not possible • When expectations could harm trust | Low | Low |
| Dark Launches | • Testing backend performance • Identifying integration risks early • Validating system impact | • When UX or frontend validation is needed | None for UX, medium for infra | Medium |
| Prototype Testing | • Exploring early concepts • Usability validation without engineering • Fast iteration | • When statistical reliability is needed | Low | Low |
| Wizard of Oz Experiments | • Testing workflows that appear automated • Validating automation heavy ideas • Reducing engineering investment | • When manual fulfilment is too complex • When scale cannot be simulated | Low to medium | Medium |
| Feature Flag Rollouts | • Controlled exposure • Testing with specific cohorts • Safe iteration before full rollout | • When tooling for flags or cohorts is not available | Low to medium | Low to medium |
| Bandit Algorithms | • Maximising conversions in real time • Handling many variants • Fast changing environments | • When long term measurement is required • When transparent results are needed | Medium to high | Medium |
| Holdout Groups | • Long term retention and monetisation impact • Understanding true feature effects over time • Maintaining a control group | • When the feature must apply to all users • When delayed impact is unacceptable | Low | High |

How to design a product experiment

A strong experiment is built on clarity, alignment and realistic expectations.

Define goal and success metric

Every experiment needs one primary metric. Secondary and guardrail metrics provide context and safety. For example, increasing onboarding completion is positive only if retention stays stable.

Write a strong hypothesis

A good hypothesis connects the user problem, the planned change and the expected outcome.

Example: If we simplify the sign up form, more users will complete onboarding because the process feels faster.
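
One way to keep goals, metrics and hypotheses consistent is to capture them in a small structured template. The sketch below is a hypothetical format: the class, field names and metric names are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass, field

@dataclass
class ExperimentSpec:
    """Hypothetical template for documenting an experiment before launch."""
    name: str
    user_problem: str
    change: str
    expected_outcome: str
    rationale: str
    primary_metric: str
    secondary_metrics: list[str] = field(default_factory=list)
    guardrail_metrics: list[str] = field(default_factory=list)

    def hypothesis(self) -> str:
        """Render the hypothesis in an 'if we ..., ... because ...' format."""
        return f"If we {self.change}, {self.expected_outcome} because {self.rationale}."

spec = ExperimentSpec(
    name="simplified-signup",
    user_problem="new users abandon the sign up form before finishing",
    change="simplify the sign up form",
    expected_outcome="more users will complete onboarding",
    rationale="the process feels faster",
    primary_metric="onboarding_completion_rate",
    secondary_metrics=["time_to_first_action"],
    guardrail_metrics=["day_30_retention"],
)
print(spec.hypothesis())
```

Requiring exactly one primary metric in the template makes it harder to pick a winning metric after the results are in.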

Identify audience and segmentation

Your experiment should target the right users. Segmentation prevents misleading results. For example, new users might react differently from long time users.

Define sample size and duration

Too few users lead to noisy results, while running a test for too long delays learning. You need confidence that the experiment has enough statistical power to detect meaningful effects.
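
As a rough guide, sample size for a conversion metric can be estimated with the standard normal approximation for comparing two proportions. The sketch below assumes a 10 percent baseline, a target of 12 percent, a 5 percent significance level, 80 percent power and 400 users per variant per day; all of these inputs are illustrative.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, target_rate, alpha=0.05, power=0.8):
    """Approximate users needed per variant for a two-sided two-proportion test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    variance = baseline_rate * (1 - baseline_rate) + target_rate * (1 - target_rate)
    effect = target_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

n = sample_size_per_variant(baseline_rate=0.10, target_rate=0.12)

# Assumed daily traffic entering each variant of the experiment.
daily_users_per_variant = 400
days = math.ceil(n / daily_users_per_variant)

print(f"Need about {n} users per variant, roughly {days} days at current traffic")
```

Halving the effect you want to detect roughly quadruples the required sample, which is why low traffic products often need bolder changes or different experiment types.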

Build, launch and monitor

Use feature flags to control exposure. Monitor for unexpected drops in guardrail metrics to prevent negative outcomes.
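
A guardrail check can be as simple as comparing each guardrail metric between control and treatment against a tolerated drop. The sketch below is deliberately naive: the metric names, values and the 2 percent tolerance are assumptions, and it ignores statistical noise, so a real monitor would also account for sample size.

```python
def guardrail_breached(control_value, treatment_value, max_relative_drop=0.02):
    """Return True if treatment is worse than control by more than the tolerated drop."""
    if control_value <= 0:
        raise ValueError("control_value must be positive")
    relative_change = (treatment_value - control_value) / control_value
    return relative_change < -max_relative_drop

# Hypothetical guardrail readings as (control, treatment) pairs.
guardrails = {
    "day_7_retention": (0.40, 0.38),
    "checkout_success_rate": (0.95, 0.949),
}

breached = [
    name
    for name, (control, treatment) in guardrails.items()
    if guardrail_breached(control, treatment)
]

if breached:
    print("Pause rollout, guardrails breached:", breached)
else:
    print("Guardrails healthy, continue rollout")
```

Pairing a check like this with a feature flag means a breach can trigger a rollback without a new deployment.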

Analyze results and make decisions

Look beyond significance. Practical significance matters more than academic thresholds. Even a small uplift may be impactful in high traffic flows.

Null or negative results are not failures. They are learning moments that help refine your roadmap.
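
The difference between statistical and practical significance can be shown with a two-proportion z-test. The sketch below uses made-up conversion counts and an assumed minimum worthwhile lift of one percentage point; it is one common analysis approach, not the only valid one.

```python
import math
from statistics import NormalDist

def two_proportion_test(conv_a, n_a, conv_b, n_b):
    """Return (absolute lift of B over A, two-sided p-value) using a pooled z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_b - p_a, p_value

# Hypothetical results: control converts 400 of 4,000, variant 460 of 4,000.
lift, p_value = two_proportion_test(400, 4000, 460, 4000)

# Assumed business threshold: lifts under one percentage point are not worth shipping.
min_worthwhile_lift = 0.01
statistically_significant = p_value < 0.05
practically_significant = lift >= min_worthwhile_lift

print(f"Lift: {lift:.3f}, p-value: {p_value:.3f}")
print(f"Ship: {statistically_significant and practically_significant}")
```

Checking both conditions guards against shipping a change that is statistically detectable but too small to matter, and against acting on a large but noisy difference.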

Product experimentation for different business models

Experimentation varies depending on business model, audience and usage patterns.

SaaS freemium and PLG

Experiments often focus on activation, onboarding, paywall placement, education flows and habit building.

Examples:

- Testing the sequence of onboarding steps

- Adjusting trial length

- Pricing page experiments

- Improving product tours to speed up time to value

B2B enterprise

Enterprise SaaS has different constraints. Traffic is lower, sales cycles are longer and decisions are influenced by multiple stakeholders.

Effective experiment types include:

- Demo flow changes

- Lead qualification tests

- Email sequence experiments

- Proof of concept feature tests

Ecommerce or marketplace models

Here, experimentation is often tied to funnels, product rankings, merchandising and recommendations.

Examples:

- Cart flow optimisation

- Personalized recommendations

- Homepage layout tests

| Business Model | Focus Areas | Example Experiments |
|---|---|---|
| SaaS Freemium and PLG | • Activation and onboarding • Paywall placement • Habit building and product education • Speeding up time to value | • Testing onboarding step sequence • Adjusting trial length • Pricing page variations • Improving product tours |
| B2B Enterprise SaaS | • Low traffic, longer sales cycles • Multiple stakeholders • High touch interactions • Qualified pipeline quality | • Demo flow changes • Lead qualification experiments • Email nurture sequence tests • Proof of concept feature tests |
| Ecommerce or Marketplace | • Funnel efficiency • Product ranking and discovery • Merchandising performance • Recommendation accuracy | • Cart flow optimisation • Personalised recommendations • Homepage layout experiments |

Common pitfalls and how to avoid them

Many teams struggle because experimentation seems easy from the outside. In practice, there are predictable mistakes:

Underpowered tests: Running experiments without enough traffic leads to false conclusions.

Too many changes at once: Complex experiments make results impossible to interpret.

Misaligned metrics: Metrics must connect directly to user behavior and business outcomes.

Poor tooling or data quality: Bad data leads to broken decisions.

Confirmation bias and cherry picking: Teams often want a specific result. This undermines learning.

Shipping without learning: Experimentation without documentation prevents future teams from understanding what worked and why.

How to scale experimentation across your team

Scaling experimentation requires more than enthusiasm. It relies on structure, alignment and predictable routines that help teams move from scattered tests to a repeatable, cross functional capability. The goal is not to run more experiments for the sake of it, but to run better experiments that consistently inform the roadmap and improve product outcomes.

Introduce a standard workflow

A clear workflow ensures that every experiment follows the same path, regardless of who runs it. Define the steps from idea to analysis to decision, and document them in a simple, repeatable format. Your workflow should include:

- How ideas are submitted and prioritised

- How hypotheses are written

- What metrics define success

- Who is responsible for setup, QA and analysis

- How decisions are made and recorded

Templates reduce confusion and make experiments more consistent over time.

Create shared documentation

A central repository allows teams to learn from past successes and mistakes. It becomes an internal knowledge base that accelerates onboarding for new team members and prevents duplicated effort.

Your documentation should include:

- Hypotheses

- Design details

- Rollout decisions

- Final results

- Key learnings

- Follow up experiments or roadmap changes

The more transparent your records, the better the learning cycle becomes.

Build rituals

Rituals keep experimentation alive inside the organisation. Weekly or biweekly experiment reviews help maintain momentum, align teams and reinforce the value of evidence based decision making.

These sessions should:

- Review ongoing experiments

- Share early signals from guardrail metrics

- Discuss upcoming tests

- Highlight learnings from completed experiments

Rituals ensure experimentation becomes a habit, not a side project.

Improve collaboration between teams

Experimentation works best when it is shared, not siloed. Each function contributes something essential.

- Engineering ensures experiments are technically sound, performant and measured accurately.

- Design validates usability and ensures the changes support user needs.

- Analytics and data verify the metrics, sample size and statistical confidence.

- Marketing or growth help shape messaging and funnel alignment.

- Product holds the strategic context and makes final decisions.

When teams work together early, experiments move faster and deliver clearer insights.

Share results widely

Sharing results, even the ones that show no improvement, builds trust and transparency. It encourages teams to take thoughtful risks and learn, rather than fear being wrong.

Celebrate small wins and highlight what did not work. Create short experiment summaries that are easy for the entire organisation to consume. This creates psychological safety and reinforces a culture where learning matters more than ego.

Tools to support product experimentation

No experimentation program succeeds without the right supporting tools. You do not need a large or expensive stack, but you do need a combination of quantitative, qualitative and deployment tools that help you run experiments safely and learn from them. Each category below solves a different part of the experimentation puzzle, and the goal is to choose the tools that fit your maturity level, team size and technical resources.

A/B testing tools

A/B testing tools allow teams to run classic experiments by comparing two or more variants of a page, component or flow. These tools help with traffic allocation, statistical analysis and experiment management.

What they are best for:

- UI changes

- Funnel optimisations

- Paywall tests

- Messaging and layout decisions

- Experiments with a single primary metric

Recommended tools:

- Optimizely, enterprise level, strong feature set

- VWO (Visual Website Optimizer), flexible and easy for non technical teams

- Convert or AB Tasty, as alternatives to Google Optimize, which Google discontinued in 2023

- Split.io, which also offers deeper engineering focused features

These tools suit teams in Stage 2 and higher of experimentation maturity.

Analytics platforms

Analytics platforms provide the foundation for understanding user behavior, conversions, retention and product engagement. Without reliable analytics, experiment results become noisy or misleading.

What they are best for:

- Tracking events and funnels

- Measuring retention and usage patterns

- Identifying friction points

- Supporting hypothesis generation

- Running cohort and segmentation analysis

Recommended tools:

- Amplitude, ideal for product analytics

- Mixpanel, easy to adopt with strong funnel analysis

- Heap, auto capture events for teams with limited engineering capacity

- Pendo, valuable for in app analytics and product guidance

Analytics tools are essential starting at Stage 1 and become critical from Stage 2 onward.

Feature flag systems

Feature flags allow teams to control who sees what, without deploying separate versions of code. They are the backbone of safe experimentation because they reduce risk, provide rollback controls and enable gradual exposure.

What they are best for:

- Safe, staged rollouts

- Testing backend changes

- Running dark launches

- Controlling experiments for specific cohorts

- Reducing the blast radius of new features

Recommended tools:

- LaunchDarkly, the market leader in feature flagging

- Flagsmith, open source and flexible

- Split.io, combines flagging with experimentation features

- ConfigCat, affordable and simple for smaller teams

Feature flags become essential starting at Stage 3 of experimentation maturity.

UX research tools

UX research tools complement quantitative data by giving teams qualitative insights, such as how users think, feel and behave. They reveal friction points that metrics alone cannot explain.

What they are best for:

- Usability testing

- Prototype testing

- Gathering user feedback early

- Understanding motivation and mental models

- Supporting experiment analysis with context

Recommended tools:

- Figma prototypes, for quick early testing

- UserTesting, moderated and unmoderated studies

- Maze, lightweight prototype and concept testing

- Lookback, live user sessions with recordings

- Hotjar, heatmaps and session recordings

These tools support all stages of experimentation and are especially powerful in early hypothesis validation.

Choosing the right stack for your maturity level

Your experimentation stack does not need to be complicated. Instead, match your tools to your needs.

If you are early (Stage 1):

- One analytics tool

- One prototype or UX testing tool

- Optional lightweight A/B testing tool

If you are building repeatability (Stage 2):

- A/B testing tool for front end changes

- A reliable analytics platform

- Basic feature flags

If you are scaling (Stage 3):

- Advanced A/B or experimentation platform

- Engineered feature flag system

- UX research tool

- Centralised experiment documentation

If you are optimising at scale (Stage 4):

- Integrated experimentation platform

- Automated traffic allocation or bandit systems

- Full feature flag infrastructure

- Real time analytics and dashboards

Each tool type supports a different layer of your experimentation engine. The goal is not to buy every tool available but to build a stack that enables safe rollouts, fast learning and high confidence in your decisions.

When your team needs help from a fractional CPO

Experimentation often breaks down because teams lack strategic guidance, not enthusiasm.

You may need support when:

- Experiments deliver unclear or contradictory results

- Tests are inconsistent and lacking structure

- There is no experimentation roadmap

- Teams disagree on metrics or interpretation

- There is little alignment between product, data and engineering

- You want to scale experimentation but lack leadership to build the system

- You want experiments to drive real business outcomes, not just UI tweaks

A fractional CPO provides the strategic oversight, processes and leadership needed to turn scattered tests into a powerful operating system.

Strengthen your experimentation practice with fractional CPO support

If your team wants to scale product experimentation, improve experiment quality, or create a culture of evidence based decision making, fractional CPO support can help. You get senior level product leadership without the cost of a full time executive, along with the systems and strategy needed to run experiments that actually move your metrics.

Work with a fractional CPO to build a product experimentation engine that drives predictable growth.

FAQs

What is product experimentation?

Product experimentation is a structured way to test product changes with real users so you can learn what actually improves engagement, activation and retention. Instead of relying on assumptions, teams run controlled experiments to gather evidence and make confident decisions.

Why is product experimentation important for SaaS companies?

SaaS products evolve quickly. Experimentation helps teams reduce risk, validate ideas early and avoid building features users do not need. It creates a predictable way to improve activation, conversion and long term retention using real user behavior instead of guesses.

How do I choose the right type of experiment?

The choice depends on your hypothesis, traffic levels and risk tolerance. A/B tests work for simple changes with high traffic. Prototypes are best for early exploration. Feature flag rollouts help you ship safely, and fake door tests help validate demand before building anything. Match the experiment type to the question you are trying to answer.

How long should a product experiment run?

It depends on your sample size, traffic levels and the metric you are measuring. Most experiments run between one and four weeks. The goal is to reach statistical confidence without running so long that external factors distort the results.

What metrics should I track during an experiment?

Every experiment should have one primary metric, such as onboarding completion or trial to paid conversion. Secondary metrics provide context, and guardrail metrics help you detect negative side effects. The metrics should reflect the user behavior you want to improve.

Sivan Kadosh is a veteran Chief Product Officer (CPO) and CEO with a distinguished 18-year career in the tech industry. His expertise lies in driving product strategy from vision to execution, having launched multiple industry-disrupting SaaS platforms that have generated hundreds of millions in revenue. Complementing his product leadership, Sivan’s experience as a CEO involved leading companies of up to 300 employees, navigating post-acquisition transitions, and consistently achieving key business goals. He now shares his dual expertise in product and business leadership to help SaaS companies scale effectively.