Your “AI Strategy” is Just a Feature Wrapper (And Investors Know It)

December 29, 2025 • 8 min read

Last Updated on February 27, 2026 by Sivan Kadosh

Your board is demanding an AI moat. Your competitors are shouting about “Agentic Workflows” on LinkedIn. And your engineering team just spent three weeks pasting an OpenAI API key into a chat window to launch a “chat-with-your-data” feature.

That is not a strategy. It is a feature wrapper.

In 2024, you could get away with it. The novelty of LLMs was enough to drive a bump in expansion revenue or a “Series A” deck. But in 2026, the honeymoon is over. Investors have sharpened their knives. They are looking past the “AI-powered” badge on your marketing site and digging into your data architecture.

If a competitor, or a weekend hacker, can copy your entire AI value proposition over a long weekend, your valuation isn’t just at risk. It is already collapsing.

Key takeaways

- The Wrapper Trap: Relying on third-party LLMs without proprietary data or deep workflow integration creates zero long-term enterprise value.

- The Intelligence Paradox: As models (GPT, Claude, Gemini) get smarter, the value of a simple interface on top of them trends toward zero.

- Defensibility Layers: Real moats are built on proprietary data loops, vertical-specific context, and “System of Action” architecture.

- Unit Economic Disaster: Without a plan to move from expensive frontier models to smaller, task-specific models, your gross margins will vanish.

The intelligence paradox: Why “Better Models” are killing your product

There is a fundamental misunderstanding in SaaS boardrooms today. Founders think: “When GPT-5 or Claude 4 comes out, my product will get better automatically.”

That is true, but it is also true for everyone else.

If your product’s primary value is “summarizing documents” or “writing emails,” and the underlying model gets 20 percent better at those tasks, your competitive advantage doesn’t grow. It shrinks. Why? Because the “delta” between what a user can do for free inside the ChatGPT interface and what they can do in your $50/month app has narrowed.

This is the intelligence paradox: The more powerful the base models become, the less valuable a simple UI layer becomes. To survive, you have to solve problems that the model cannot solve on its own, no matter how “smart” it gets.


Confession time: I admit it – I was wrong. I didn’t see the AI tsunami coming, or at least not with the force that it hit us (and continues to hit us). At first, I thought it was just buzz, a passing trend. I failed to foresee the seismic impact it would have on my world and my clients. OpenAI triggered an earthquake, and the resulting tsunami washed away countless startups – companies with great ideas and excellent execution. It’s heartbreaking, but as the saying goes: Así es la vida (that sounds better in Spanish).

When I dove deep into the research for this article, the findings were unequivocal. In viral threads across leading communities like r/SaaS and r/YCombinator, founders now refer to OpenAI announcement days as “The Extinction Event.” I encountered a painful, documented case of a founder who built a “Chat with PDF” tool generating a steady $20k MRR. The moment OpenAI shipped the same capability to ChatGPT Plus users at no extra cost, his traffic plummeted by 90% in a single weekend.

This is the new economic reality, and even the biggest investors are pointing it out. A seminal Andreessen Horowitz (a16z) article, “Who Owns the Generative AI Platform?”, emphasizes that most of the value in the AI food chain is accruing to infrastructure providers, not the wrapper applications on top.

Furthermore, even the survivors are bleeding. Sequoia Capital calls this “The $600 Billion Question”: the massive gap between infrastructure investment and actual revenue. Founders on Reddit report burning thousands monthly on API costs (tokens) while their LTV erodes. The conclusion from my research is clear: If your strategy can be wiped out by a single software update from Sam Altman, you don’t have a company – you have a temporary feature.

The 2026 due diligence reality check

The questions you will hear in your next fundraising round have changed. VCs have been burned by “AI-first” startups that were effectively just marketing firms for OpenAI. They are now looking for “Model Agnostic Defensibility.”

They will ask:

- The Swap Test: “If I swap your OpenAI API for an open-source Llama model tomorrow, does the product still work? Does it still have an advantage?”

- The Incumbent Risk: “Why won’t Salesforce, HubSpot, or Microsoft add this exact feature as a free toggle next month?”

- The Data Flywheel: “Does your AI get better because your customers use it, or does it only get better when Sam Altman releases an update?”

If your answer relies on the “cleverness” of your prompts, you don’t have a moat. You have a temporary head start.
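One way to make the Swap Test concrete is to keep the model provider behind a narrow interface, so swapping it does not touch product logic. The sketch below is illustrative only: `CompletionProvider`, `HostedStub`, `OpenWeightsStub`, and `summarize_ticket` are hypothetical names, and the stubs stand in for real API calls.

```python
from typing import Protocol


class CompletionProvider(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def complete(self, prompt: str) -> str: ...


class HostedStub:
    """Stand-in for a hosted frontier model (no real API call is made)."""

    def complete(self, prompt: str) -> str:
        return f"[hosted] {prompt[:40]}"


class OpenWeightsStub:
    """Stand-in for a self-hosted open-weights model."""

    def complete(self, prompt: str) -> str:
        return f"[open-weights] {prompt[:40]}"


def summarize_ticket(provider: CompletionProvider, ticket: str, account_notes: str) -> str:
    # The defensible part is the context assembled here, not the model behind it.
    prompt = f"Account notes: {account_notes}\nTicket: {ticket}\nSummarize."
    return provider.complete(prompt)


if __name__ == "__main__":
    # Same product code, two different providers.
    for provider in (HostedStub(), OpenWeightsStub()):
        print(summarize_ticket(provider, "Invoice is late", "Net-30 terms"))
```

If the product only works with one vendor's stub plugged in, the Swap Test has already been failed.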

Building a real moat: The four layers of defensibility

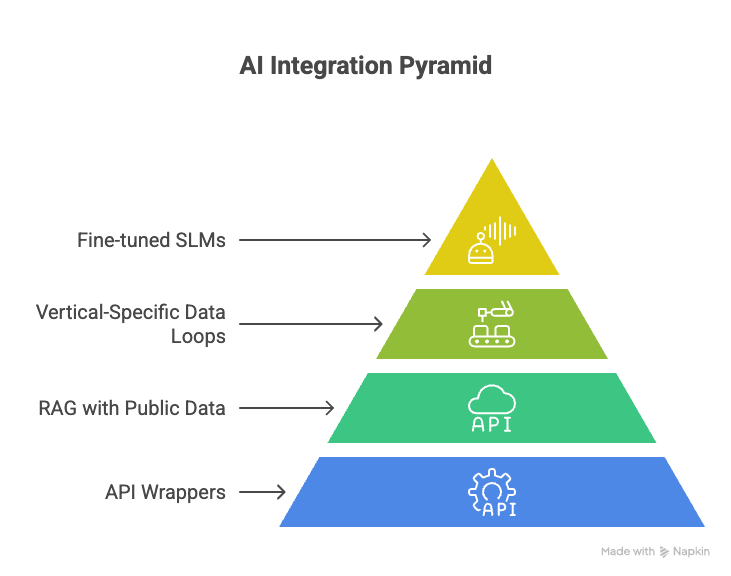

To move away from being a “feature wrapper,” you must architect your product across four specific layers.

1. The context layer (Vertical-specific RAG)

Retrieval-Augmented Generation (RAG) is the baseline for 2026. But “Chat with your PDF” is the bottom of the barrel. Real context comes from connecting to the unstructured data that lives in the cracks of your customers’ businesses.

If you are a construction SaaS, your moat isn’t an AI that reads contracts. It is an AI that connects contracts to real-time weather data, supply chain delays, and historical labor costs from 500 previous projects. That is “Vertical Context,” and it is incredibly hard for a horizontal player like Microsoft to replicate.
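As a rough illustration of “Vertical Context,” the sketch below joins contract clauses, weather alerts, and supply delays for a single project into one context block that could be handed to a model. All record types, field names, and sample values are assumptions made for the example, not a real schema.

```python
from dataclasses import dataclass


@dataclass
class ContractClause:
    project_id: str
    text: str


@dataclass
class WeatherAlert:
    project_id: str
    summary: str


@dataclass
class SupplyDelay:
    project_id: str
    material: str
    days_late: int


def build_context(project_id, clauses, alerts, delays) -> str:
    """Join the records for one project into a single prompt context block."""
    lines = []
    lines += [f"CONTRACT: {c.text}" for c in clauses if c.project_id == project_id]
    lines += [f"WEATHER: {a.summary}" for a in alerts if a.project_id == project_id]
    lines += [
        f"SUPPLY: {d.material} delayed {d.days_late} days"
        for d in delays
        if d.project_id == project_id
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical sample data for two projects.
    clauses = [ContractClause("p1", "Penalty applies after 14 days of delay")]
    alerts = [WeatherAlert("p1", "Heavy rain expected Thursday"), WeatherAlert("p2", "Clear")]
    delays = [SupplyDelay("p1", "steel", 6)]
    print(build_context("p1", clauses, alerts, delays))
```

The model never sees these sources unless the product wires them together, which is the point of this layer.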

2. The workflow layer (System of Action)

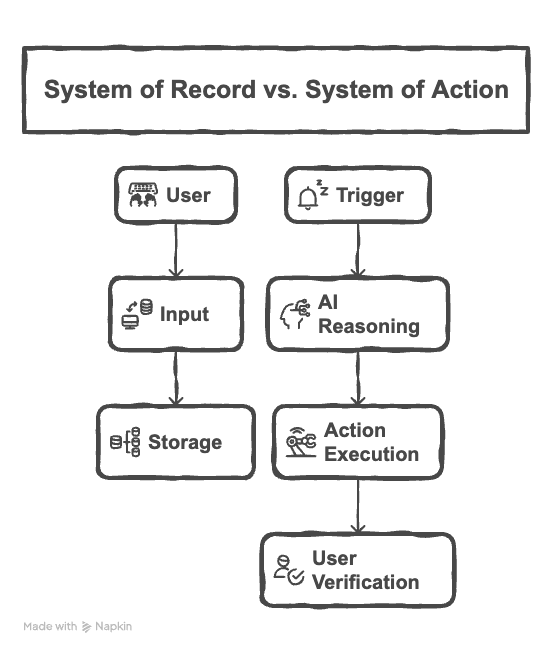

Most SaaS tools are “Systems of Record”: fancy filing cabinets for data. Investors value “Systems of Action.”

A feature wrapper tells a user: “Here is a draft of an email.” An AI-Native product says: “I noticed this invoice is 10 days late, the client’s payment terms just changed, and their CFO is out of office. I’ve drafted a follow-up, adjusted the cash flow forecast, and put a hold on their next shipment. Click here to approve.”

The AI isn’t an assistant; it is a specialized worker integrated into the core workflow of the business.
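A minimal sketch of the “System of Action” pattern, under simplifying assumptions: the system proposes a bundle of actions for a late invoice and nothing runs until a human approves. The `Invoice` and `ProposedAction` types, the action names, and the 10-day threshold default are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Invoice:
    client: str
    due: date
    paid: bool = False


@dataclass
class ProposedAction:
    kind: str
    detail: str
    approved: bool = False  # nothing executes until a human approves


def propose_actions(invoice: Invoice, today: date, late_after_days: int = 10) -> list:
    """Return the actions to queue for approval, or an empty list if none apply."""
    days_late = (today - invoice.due).days
    if invoice.paid or days_late < late_after_days:
        return []
    return [
        ProposedAction("draft_follow_up", f"Follow up with {invoice.client} ({days_late} days late)"),
        ProposedAction("adjust_forecast", f"Push expected cash from {invoice.client}"),
        ProposedAction("hold_shipment", f"Hold next shipment to {invoice.client}"),
    ]


def approve_all(actions) -> None:
    """The single 'Click here to approve' step."""
    for action in actions:
        action.approved = True


if __name__ == "__main__":
    queue = propose_actions(Invoice("Acme", date(2026, 1, 1)), today=date(2026, 1, 11))
    approve_all(queue)
    for action in queue:
        print(action.kind, "->", action.detail)
```

The design choice worth noting is the approval flag: the AI does the work up front, while the user keeps the final decision.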

3. The data flywheel layer

Your strategy must include a “Reinforcement Learning from Human Feedback” (RLHF) loop. Every time a user accepts, rejects, or edits an AI suggestion, that data should be used to improve the system for that specific customer or industry.

When your product becomes a “living organism” that learns the nuances of a specific company’s brand voice or technical constraints, it becomes “un-churnable.” The cost of switching to a competitor isn’t just a data migration fee; it is the cost of losing a system that “knows” how the company works.
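The first step of such a loop is simply capturing the signal. The sketch below records accept, reject, and edit events, computes a per-customer acceptance rate, and extracts (draft, preferred text) pairs from edits that could later feed tuning or evaluation. It covers data collection only, not the training step, and every name in it is hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackEvent:
    customer_id: str
    suggestion: str
    outcome: str  # "accepted", "rejected", or "edited"
    final_text: Optional[str] = None  # what the user kept, if they edited


def acceptance_rate(events) -> dict:
    """Share of suggestions accepted unchanged, per customer."""
    counts = defaultdict(lambda: [0, 0])  # customer -> [accepted, total]
    for event in events:
        counts[event.customer_id][1] += 1
        if event.outcome == "accepted":
            counts[event.customer_id][0] += 1
    return {customer: accepted / total for customer, (accepted, total) in counts.items()}


def preference_pairs(events) -> list:
    """Edits yield (original draft, preferred text) pairs for later tuning."""
    return [
        (event.suggestion, event.final_text)
        for event in events
        if event.outcome == "edited" and event.final_text
    ]


if __name__ == "__main__":
    log = [
        FeedbackEvent("acme", "Dear Sir", "edited", "Hi Dana"),
        FeedbackEvent("acme", "Thanks for your order", "accepted"),
        FeedbackEvent("acme", "URGENT!!!", "rejected"),
        FeedbackEvent("acme", "See attached invoice", "accepted"),
    ]
    print(acceptance_rate(log))
    print(preference_pairs(log))
```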

4. The proprietary model layer (The end-game)

Eventually, the most defensible SaaS companies will move away from paying “The Big Three” for every single token. They will use frontier models to label data, and then fine-tune smaller, open-source models (like Mistral or Llama 3) for specific tasks.

Owning your weights is the ultimate moat. It improves latency, protects privacy, and, most importantly, slashes your inference costs.

There is a dirty secret in the industry right now: AI features are a margin killer.

In the traditional SaaS model, your gross margins should be 80 percent or higher. But if you are routing every user interaction through GPT-4o, your “cost of goods sold” (COGS) isn’t just server hosting; it is a massive, variable API bill.

A fractional CPO looks at this through a business lens, not just a technical one. We implement Inference Optimization:

- Model Routing: Using “cheap” models for simple tasks (summarization, formatting) and “expensive” models only for complex reasoning.

- Semantic Caching: If three users ask the same question, the system shouldn’t pay to “think” three times. It should serve the cached AI response.

- Small Language Models (SLMs): Moving tasks to tiny, specialized models that can run on a fraction of the hardware.
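The first two tactics can be sketched in a few lines. This is an illustration under loud assumptions: the model tier names are placeholders, `call_model` is a function the caller supplies, and the cache uses Jaccard word overlap as a crude stand-in for the embedding similarity a production semantic cache would typically use.

```python
import re

CHEAP_TASKS = {"summarization", "formatting"}  # the "simple tasks" named above


def route(task_type: str) -> str:
    """Return a hypothetical model tier for a task type."""
    return "cheap-model" if task_type in CHEAP_TASKS else "expensive-model"


def _tokens(text: str) -> frozenset:
    return frozenset(re.findall(r"[a-z0-9]+", text.lower()))


def similarity(a: str, b: str) -> float:
    """Jaccard word overlap: a stand-in for embedding cosine similarity."""
    ta, tb = _tokens(a), _tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


class SimilarityCache:
    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self.entries = []  # list of (question, answer) pairs

    def get(self, question: str):
        for cached_question, cached_answer in self.entries:
            if similarity(question, cached_question) >= self.threshold:
                return cached_answer
        return None

    def put(self, question: str, answer: str) -> None:
        self.entries.append((question, answer))


def answer(question: str, task_type: str, cache: SimilarityCache, call_model) -> str:
    """Serve from cache when a similar question was already paid for."""
    cached = cache.get(question)
    if cached is not None:
        return cached
    result = call_model(route(task_type), question)
    cache.put(question, result)
    return result
```

With this shape, three users asking the same question in slightly different casing or punctuation trigger one paid model call instead of three. The linear scan is fine for a sketch; a real system would likely use a vector index.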

| Strategy | Feature Wrapper Approach | Fractional CPO Strategy |
| --- | --- | --- |
| Model Selection | Uses GPT-4 for everything (expensive). | Routes simple tasks to cheaper models (Llama/Haiku). |
| Data Processing | Sends entire documents per query. | Uses vector chunks and semantic caching to reduce tokens. |
| Unit Economics | Costs scale linearly with usage. | Costs flatten as you optimize and fine-tune. |
| Margin Impact | 60-70% Gross Margins. | 80%+ Gross Margins. |

If you don’t have a plan for unit economic optimization, your “AI growth” will eventually lead to a “Margin Death Spiral.”
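The margin arithmetic is worth running against your own numbers. In the sketch below every figure is hypothetical: a $50/month seat, $5 of hosting per seat, and two illustrative inference bills. Gross margin is computed as (revenue − COGS) / revenue.

```python
def gross_margin(revenue: float, hosting: float, inference: float) -> float:
    """Gross margin as a fraction, treating hosting + inference as COGS."""
    return (revenue - hosting - inference) / revenue


def max_inference_spend(revenue: float, hosting: float, target_margin: float) -> float:
    """Largest per-seat inference bill that still hits the target margin."""
    return revenue - target_margin * revenue - hosting


if __name__ == "__main__":
    # Hypothetical per-seat monthly figures, for illustration only.
    price, hosting = 50.0, 5.0
    wrapper = gross_margin(price, hosting, inference=12.0)
    optimized = gross_margin(price, hosting, inference=4.0)
    print(f"wrapper margin:   {wrapper:.0%}")
    print(f"optimized margin: {optimized:.0%}")
    print(f"inference budget for 80%: ${max_inference_spend(price, hosting, 0.80):.2f}")
```

Under these assumed numbers, the inference bill alone moves the product from the 60-70% band into 80%+ territory, which is why the budget function is the more useful one to keep on hand.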

Strategic Boredom: Why your CTO can’t do this alone

Your CTO is likely focused on the “how”: the latency, the vector DB, the API integration. But a CPO focuses on the “why” and the “what.”

Building an AI strategy is a product problem, not a dev problem. It requires understanding the customer’s “Jobs to be Done” so deeply that you can identify where AI provides a transformative benefit rather than just a cool gimmick.

The roadmap to a defensible AI strategy

If you are currently sitting on a feature wrapper, don’t panic. But you do need to pivot. Here is the 90-day roadmap we implement for our clients:

Day 1-30: The Audit. Identify every AI feature. Which of these could a competitor copy in a week? Which of these depend entirely on the model being “smart”?

Day 31-60: Context Injection. Identify the proprietary data sources you have access to. How can we feed that data into the AI to provide answers that ChatGPT literally cannot know?

Day 61-90: Action Integration. Move the AI from a “chat box” on the side to a “trigger” in the middle of the workflow. Make it do things, not just say things.

Order in the age of AI chaos

The “Gold Rush” phase of AI is ending. The “Infrastructure” phase is beginning.

Investors aren’t looking for more “AI-powered” apps. They are looking for the next generation of category-defining software that uses AI as a core architectural component, not a marketing sticker.

At SaaS Fractional CPO, we specialize in helping founders transition from “AI wrappers” to “AI moats.” We bring the frameworks of a $100M company to your $5M or $10M SaaS, ensuring your next fundraising round isn’t just about hype; it’s about a defensible, scalable engine.

Additional resource: Stop Hiring Full-Time CPOs at $5M ARR: The Economic Case for Fractional Leadership

Stop building on borrowed intelligence.

Sivan Kadosh is a veteran Chief Product Officer (CPO) and CEO with a distinguished 18-year career in the tech industry. His expertise lies in driving product strategy from vision to execution, having launched multiple industry-disrupting SaaS platforms that have generated hundreds of millions in revenue. Complementing his product leadership, Sivan’s experience as a CEO involved leading companies of up to 300 employees, navigating post-acquisition transitions, and consistently achieving key business goals. He now shares his dual expertise in product and business leadership to help SaaS companies scale effectively.